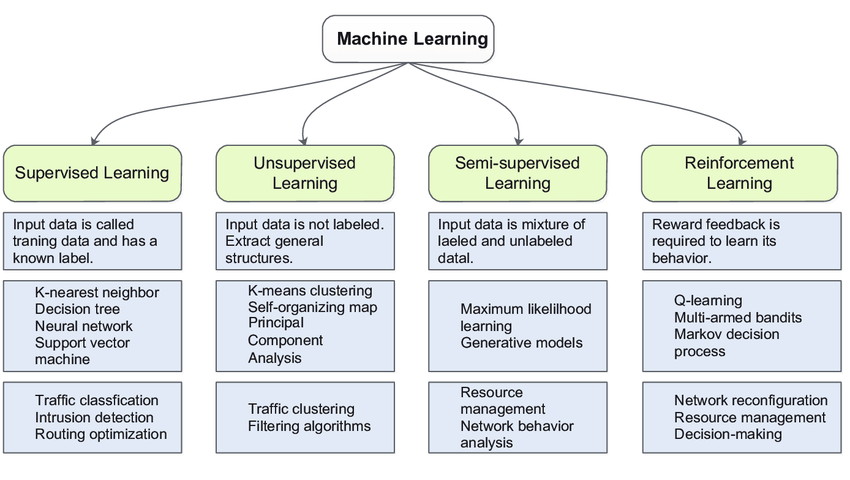

Machine learning is a branch of artificial intelligence (AI) and computer science which focuses on the use of data and algorithms to imitate the way that humans learn, gradually improving its accuracy.

Machine learning is an important component of the growing field of data science. Through the use of statistical methods, algorithms are trained to make classifications or predictions and uncover important insights in data mining projects.

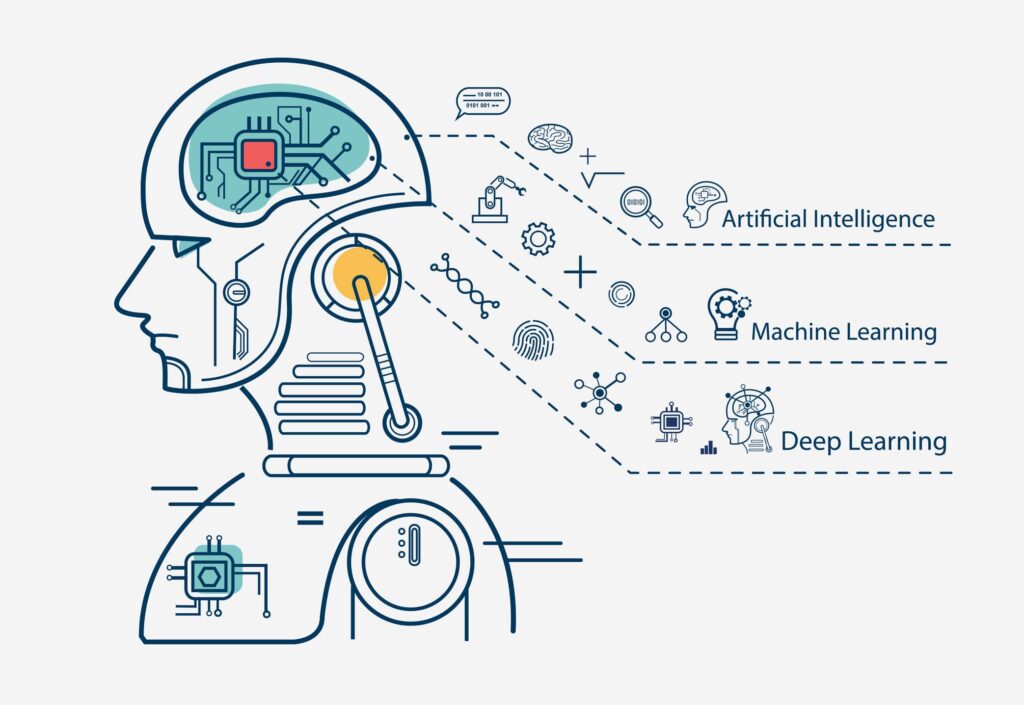

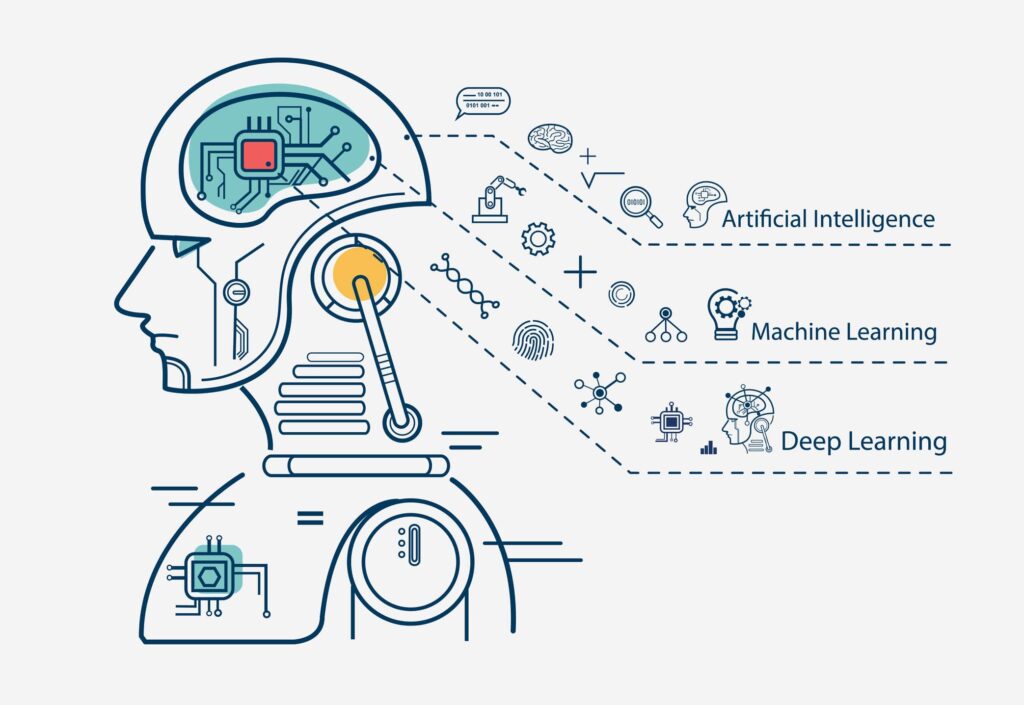

• Machine Learning vs. Deep Learning vs. Neural Networks

Since the terms deep learning and machine learning are often used interchangeably, it is worth noting the nuances between the two. Machine learning, deep learning, and neural networks are all subfields of artificial intelligence. However, neural networks are actually a subfield of machine learning, and deep learning is a subfield of neural networks.

Classical, or “non-deep”, machine learning is more dependent on human intervention to learn. Human experts determine the set of features to understand the differences between data inputs, usually requiring more structured data to learn.

Deep learning and neural networks are credited with accelerating progress in areas such as computer vision, natural language processing, and speech recognition.

Neural networks, or artificial neural networks (ANNs), are made up of node layers: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, connects to others and has an associated weight and threshold. If the output of a node is above its threshold, the node is activated and sends data to the next layer of the network.
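To make the idea of layers, weights, and thresholds concrete, here is a minimal sketch in Python. It assumes a node "fires" (outputs 1) when the weighted sum of its inputs exceeds its threshold, and the weights and thresholds are hand-picked for illustration rather than learned. The names `node_output`, `layer_output`, and `predict` are hypothetical helpers, not part of any library.

```python
def node_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def layer_output(inputs, layer):
    """Evaluate every node in a layer; each node is a (weights, threshold) pair."""
    return [node_output(inputs, weights, threshold) for weights, threshold in layer]


# Hand-picked (not learned) weights and thresholds, for illustration only.
hidden_layer = [
    ([1.0, 1.0], 0.5),  # fires if at least one input is on
    ([1.0, 1.0], 1.5),  # fires only if both inputs are on
]
output_layer = [
    ([1.0, -1.0], 0.5),  # fires if the first hidden node is on and the second is off
]


def predict(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer_output(inputs, hidden_layer)
    return layer_output(hidden, output_layer)[0]


for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, "->", predict(pair))
```

With these particular values the network outputs 1 only when exactly one of the two inputs is on, something a single threshold node cannot do by itself. That is one reason hidden layers matter.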

• How machine learning works

A Decision Process: In general, machine learning algorithms are used to make a prediction or classification. Based on some input data, which can be labeled or unlabeled, your algorithm will produce an estimate about a pattern in the data.

An Error Function: An error function evaluates the prediction of the model. If there are known examples, an error function can make a comparison to assess the accuracy of the model.

A Model Optimization Process: If the model can fit better to the data points in the training set, then weights are adjusted to reduce the discrepancy between the known example and the model estimate. The algorithm will repeat this “evaluate and optimize” process, updating weights autonomously until a threshold of accuracy has been met.
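The three parts above can be sketched as a small training loop. This is only an illustration under stated assumptions: the model is a straight line `w * x + b`, the error function is mean squared error, the optimization step is plain gradient descent, and the stopping rule is an error threshold rather than an accuracy threshold. The data, the learning rate, and the function names are all made up for the example.

```python
def predict(w, b, x):
    """Decision process: the model's estimate for input x."""
    return w * x + b


def mean_squared_error(w, b, xs, ys):
    """Error function: average squared gap between estimates and known examples."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, learning_rate=0.05, target_error=1e-6, max_steps=10_000):
    """Optimization: repeat 'evaluate and optimize' until the error is small enough."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(max_steps):
        if mean_squared_error(w, b, xs, ys) < target_error:
            break
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in zip(xs, ys)) / n
        # Adjust the weights in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, mean_squared_error(w, b, xs, ys)


# Made-up labeled examples that follow y = 2x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

w, b, error = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, error={error:.2e}")
```

Because the example data lies exactly on a line, the learned `w` and `b` end up close to 2 and 1. Real data is noisy, so the error usually levels off above zero and the stopping rule has to allow for that.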

• Common machine learning algorithms

A number of machine learning algorithms are commonly used. These include:

- Neural networks: Neural networks simulate the way the human brain works, with a huge number of linked processing nodes. Neural networks are good at recognizing patterns and play an important role in applications including natural language translation, image recognition, speech recognition, and image creation.

- Linear regression: This algorithm is used to predict numerical values, based on a linear relationship between different values. For example, the technique could be used to predict house prices based on historical data for the area.

- Logistic regression: This supervised learning algorithm makes predictions for categorical response variables, such as “yes/no” answers to questions. It can be used for applications such as classifying spam and quality control on a production line.

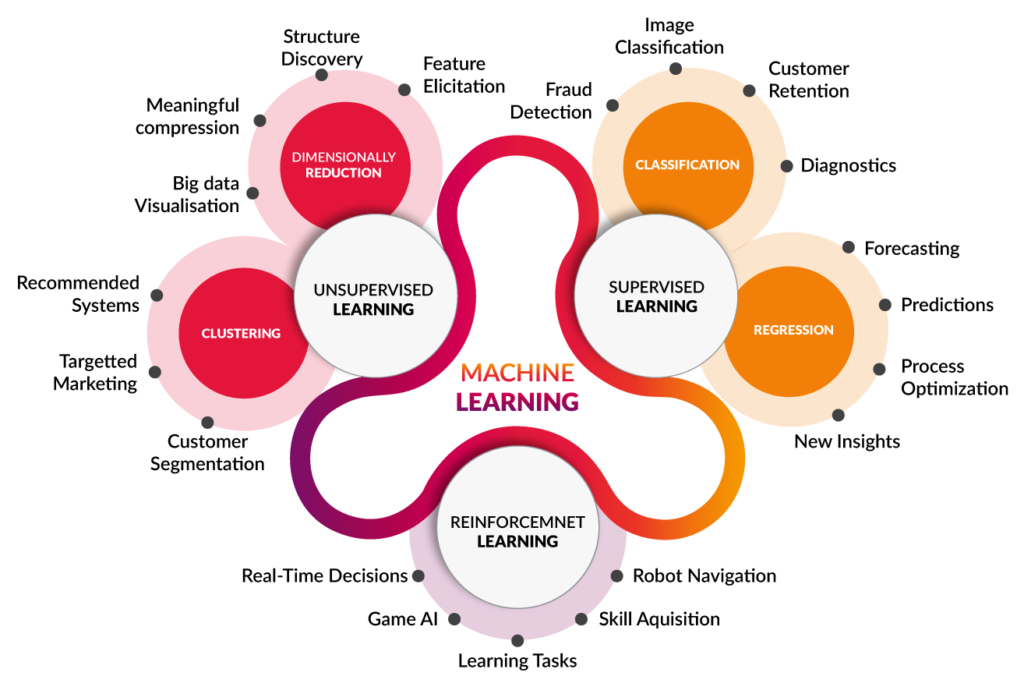

- Clustering: Using unsupervised learning, clustering algorithms can identify patterns in data so that it can be grouped. Computers can help data scientists by identifying differences between data items that humans have overlooked.

- Decision trees: Decision trees can be used for both predicting numerical values (regression) and classifying data into categories. Decision trees use a branching sequence of linked decisions that can be represented with a tree diagram. One of the advantages of decision trees is that they are easy to validate and audit, unlike the black box of the neural network.

- Random forests: In a random forest, the machine learning algorithm predicts a value or category by combining the results from a number of decision trees.
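As a rough illustration of the last two items, the sketch below writes three tiny decision trees by hand and combines their answers with a majority vote. A real random forest learns its trees from random samples of the training data. Here the trees, the feature names (`num_links`, `caps_ratio`, `known_sender`), and the thresholds are all hypothetical, so that only the "combine the results" step is on show.

```python
from collections import Counter


# Each tree is a short chain of linked decisions about one message.
def tree_links(msg):
    return "spam" if msg["num_links"] > 2 else "not spam"


def tree_caps(msg):
    return "spam" if msg["caps_ratio"] > 0.5 else "not spam"


def tree_sender(msg):
    if msg["known_sender"]:
        return "not spam"
    return "spam" if msg["num_links"] > 0 else "not spam"


FOREST = [tree_links, tree_caps, tree_sender]


def forest_predict(msg):
    """Collect one vote per tree and return the most common category."""
    votes = Counter(tree(msg) for tree in FOREST)
    return votes.most_common(1)[0][0]


# Hypothetical messages described by hand-made features.
suspicious = {"num_links": 4, "caps_ratio": 0.1, "known_sender": False}
friendly = {"num_links": 0, "caps_ratio": 0.7, "known_sender": True}

print(forest_predict(suspicious))  # two of three trees vote "spam"
print(forest_predict(friendly))    # two of three trees vote "not spam"
```

Each tree can be read and audited line by line, which is the advantage described above. For regression, the same idea applies with the trees' numeric predictions averaged instead of voted on.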

• Challenges of machine learning

As machine learning technology has developed, it has certainly made our lives easier. However, it has also raised a number of concerns, including the following.

Technological singularity

While this topic garners a lot of public attention, many researchers are not concerned with the idea of AI surpassing human intelligence in the near future. Technological singularity is also referred to as strong AI or superintelligence. Philosopher Nick Bostrom defines superintelligence as “any intellect that vastly outperforms the best human brains in practically every field, including scientific creativity, general wisdom, and social skills.”

AI impact on jobs

While a lot of public perception of artificial intelligence centers around job losses, this concern should probably be reframed. With every disruptive new technology, we see that the market demand for specific job roles shifts.

Digi Hub Solution

Digital Service Provider